VideoMap: Video Editing in Latent Space

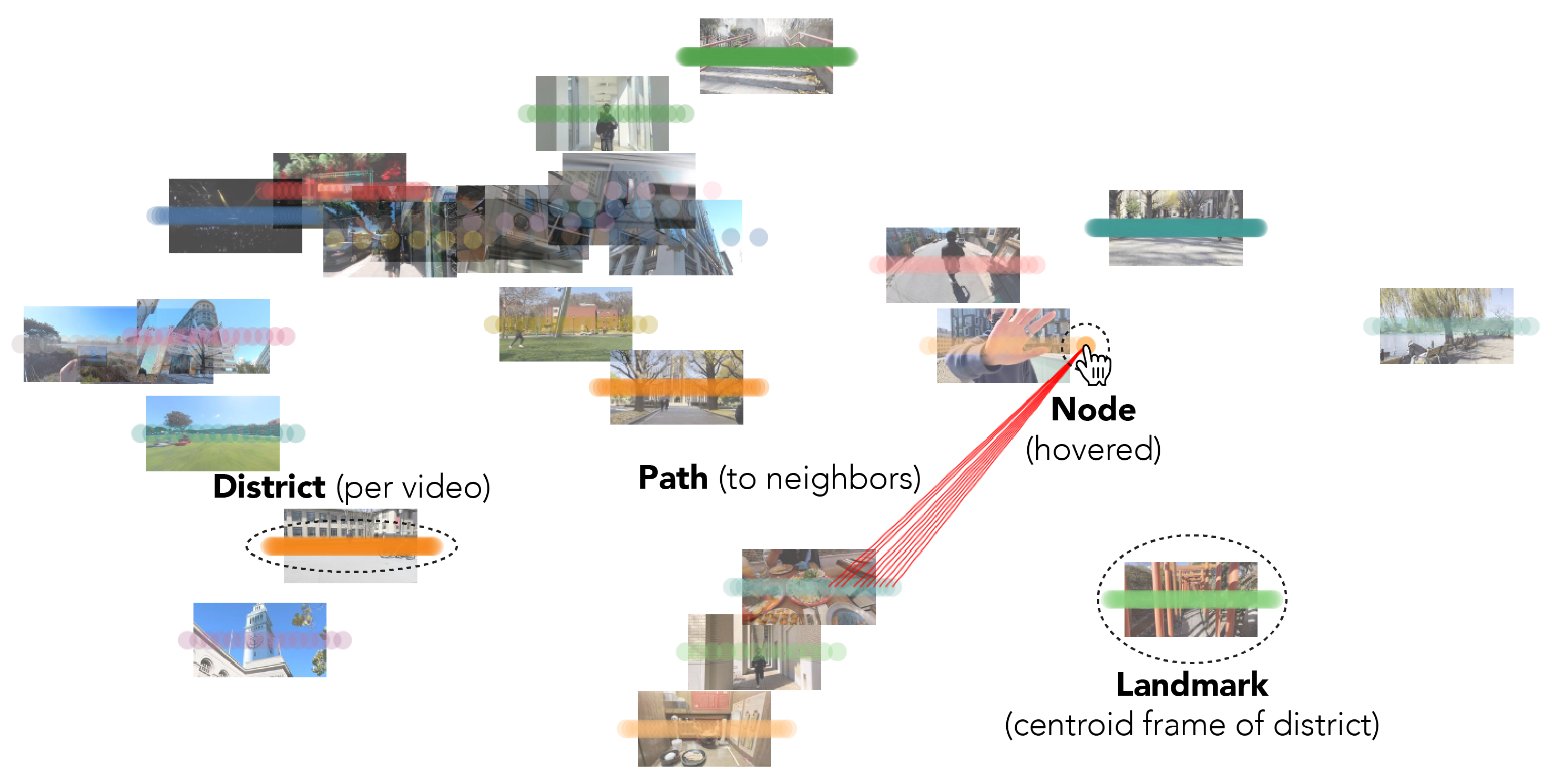

VideoMap is a proof-of-concept video editing interface that operates on video frames projected onto a latent space, enabling users to visually uncover patterns and relationships. We introduce map-inspired navigational elements (node, path, district, landmark) to support users in navigating the map.

Abstract

Video editing is a creative and complex endeavor, and we believe there is potential for a reimagined video editing interface that better supports this process. We take inspiration from latent space exploration tools that help users find patterns and connections within complex datasets. We introduce VideoMap, a proof-of-concept video editing interface that operates on video frames projected onto a latent space. We support intuitive navigation through map-inspired navigational elements and facilitate transitioning between different latent spaces using swappable lenses. We built three VideoMap components to support editors in three common video editing tasks: organizing video footage, identifying suitable video transitions, and rapidly prototyping rough cuts. In a user study with both professionals and non-professionals (N=14), editors found that VideoMap provides a user-friendly editing experience, reduces tedious grunt work, enhances the overview capability of video footage, helps identify continuous video transitions, and enables a more exploratory approach to video editing. We further demonstrate the versatility of VideoMap by implementing three extended applications.

Project Panel

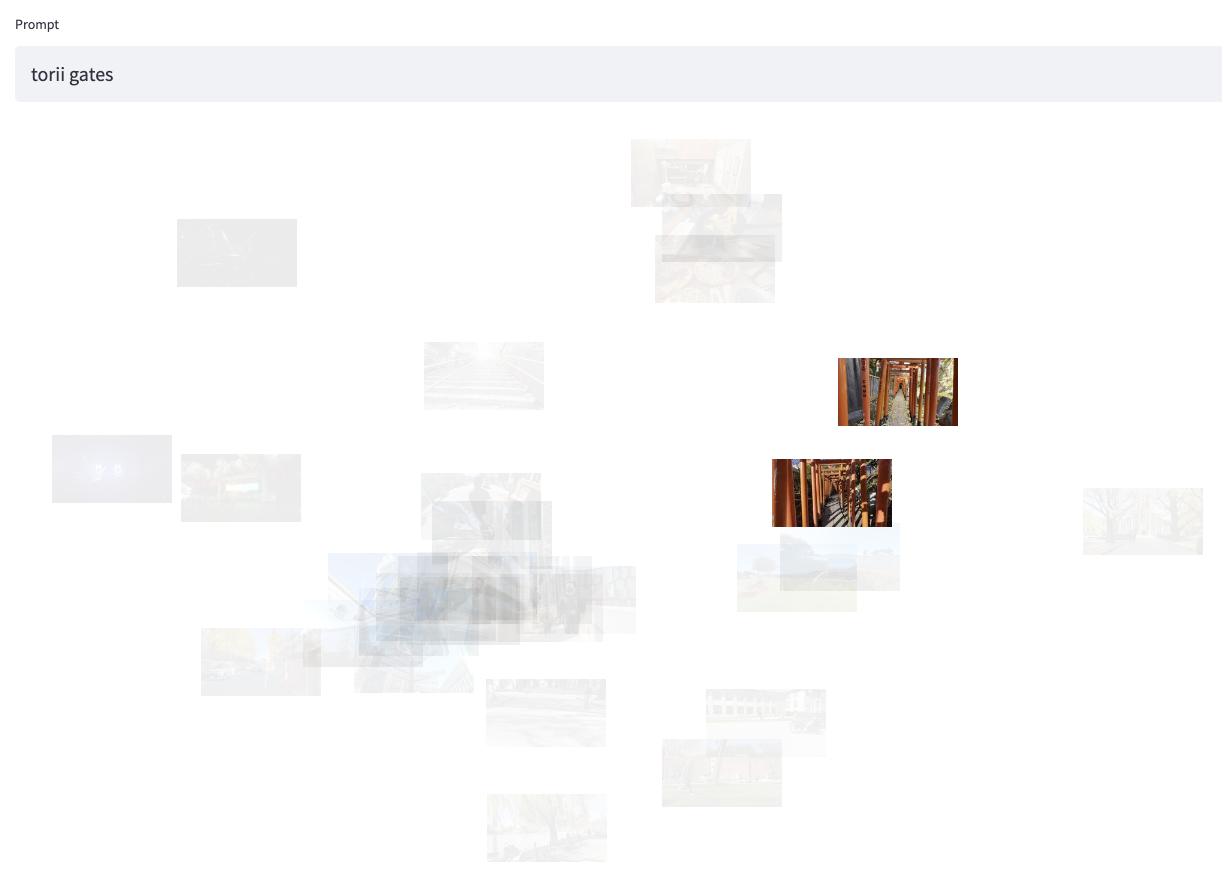

The figure shows a collection of videos organized under the semantic lens. The editor can play through the videos by scrubbing the landmarks from left to right (a). Example semantic clusters of videos are shown in (b) for several videos containing streets and buildings and (c) for concert videos.

Swappable Lenses

The editor can switch between different lenses to rearrange the layout. Using the semantic lens, videos containing semantically similar concepts are close together. Using the color lens, videos with similar color schemes are close together. Using the shape lens, videos containing objects of similar shapes are close together.
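One way to picture lens swapping is as a lookup into a different embedding table for the same frames. The sketch below is illustrative only: the `EMBEDDINGS` table, the frame ids, and the two-dimensional vectors are made-up stand-ins, and it does not show how VideoMap computes its lens features.

```python
from math import dist

# Hypothetical precomputed embeddings: one vector per frame, per lens.
EMBEDDINGS = {
    "semantic": {"street_a": [0.9, 0.1], "street_b": [0.8, 0.2], "concert": [0.1, 0.9]},
    "color": {"street_a": [0.2, 0.7], "street_b": [0.9, 0.1], "concert": [0.25, 0.65]},
}

def closest_frame(frame_id, lens):
    """Return the frame that sits nearest to frame_id under the given lens."""
    space = EMBEDDINGS[lens]
    others = (f for f in space if f != frame_id)
    return min(others, key=lambda f: dist(space[frame_id], space[f]))

# The same frame has different neighbors depending on the active lens.
semantic_neighbor = closest_frame("street_a", "semantic")
color_neighbor = closest_frame("street_a", "color")
```

With this toy data, the two street frames are neighbors under the semantic lens, while the first street frame and the concert frame are neighbors under the color lens.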

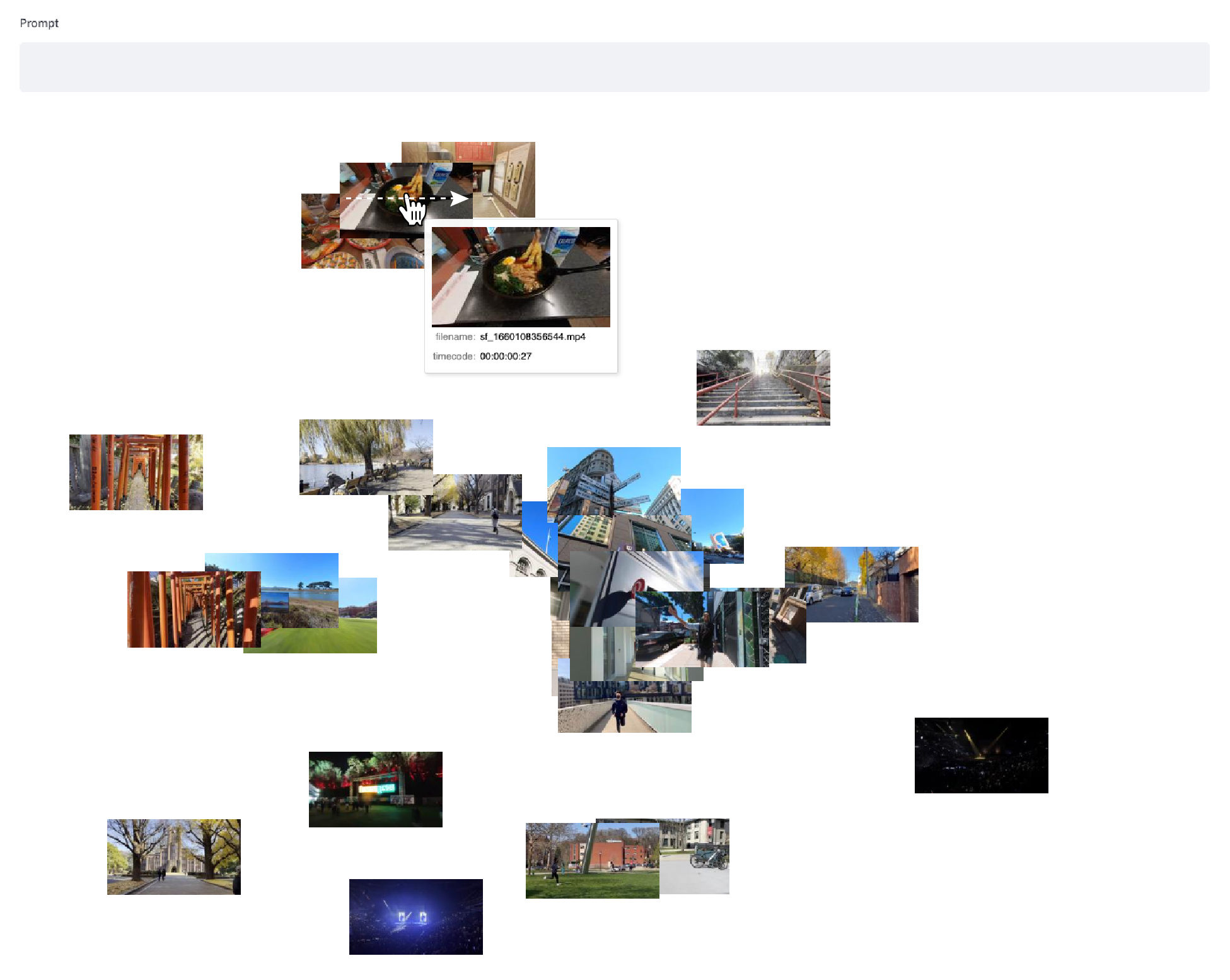

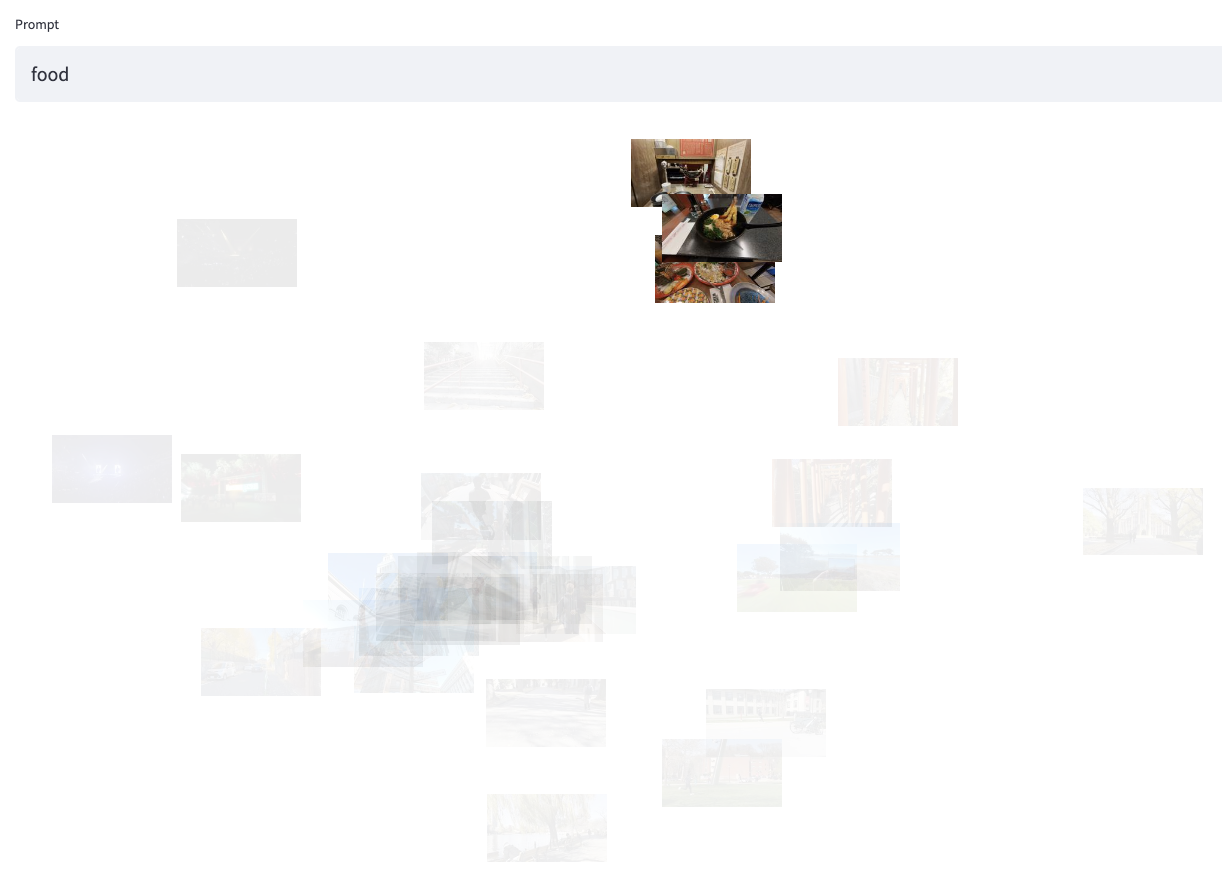

Prompts

The editor can filter videos using natural language prompts.
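Prompt filtering of this kind can be sketched as a similarity threshold, assuming the prompt and the videos are embedded into a shared space by some text-image model. The vectors, the `threshold` value, and the function names below are assumptions for illustration, not details taken from VideoMap.

```python
from math import sqrt

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def filter_by_prompt(prompt_vec, video_vecs, threshold=0.8):
    """Keep the ids of videos whose embedding is similar enough to the prompt."""
    return [vid for vid, vec in video_vecs.items() if cosine(prompt_vec, vec) >= threshold]

# Made-up embeddings; prompt_vec stands in for an embedded prompt such as "ocean waves".
video_vecs = {"beach": [0.9, 0.1, 0.0], "city": [0.1, 0.9, 0.1], "surf": [0.8, 0.2, 0.1]}
prompt_vec = [1.0, 0.0, 0.0]
matches = filter_by_prompt(prompt_vec, video_vecs)
```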

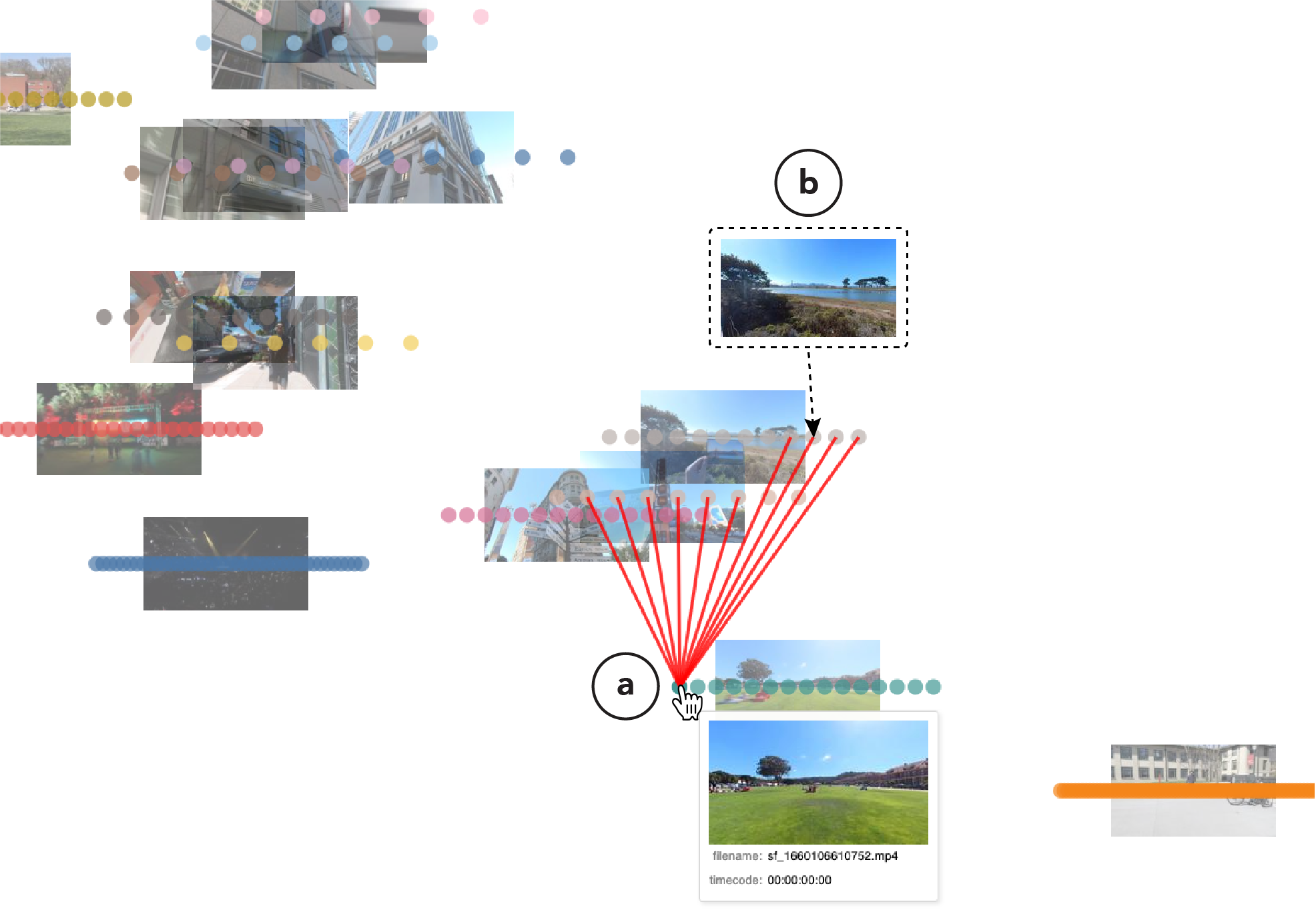

Paths Explorer

The editor can select a video frame to display ten video transition suggestions based on the selected lens (a). For example, a video frame with a similar color composition is recommended under the color lens (b).
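The suggestion step can be read as a nearest-neighbor query in the space of the active lens. The following is a minimal sketch under that reading; the frame keys, the toy embeddings, and the choice to skip frames from the selected frame's own video are assumptions rather than documented VideoMap behavior.

```python
import heapq
from math import dist

def suggest_transitions(selected, frames, k=10):
    """Return the k frames nearest to the selected frame under the active lens.

    frames maps (video_id, frame_index) to an embedding vector.
    """
    query = frames[selected]
    # Assumption: a transition should lead to a different video.
    candidates = (key for key in frames if key[0] != selected[0])
    return heapq.nsmallest(k, candidates, key=lambda key: dist(query, frames[key]))

frames = {
    ("a", 0): [0.0, 0.0],
    ("a", 1): [0.1, 0.0],
    ("b", 0): [0.2, 0.0],
    ("b", 1): [0.5, 0.5],
    ("c", 0): [1.0, 1.0],
}
suggestions = suggest_transitions(("a", 0), frames, k=2)
```

The default `k=10` mirrors the ten suggestions described above. A linear scan like this is fine for small projects; a larger library would likely call for an approximate nearest-neighbor index.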

Example transitions created by user study participants

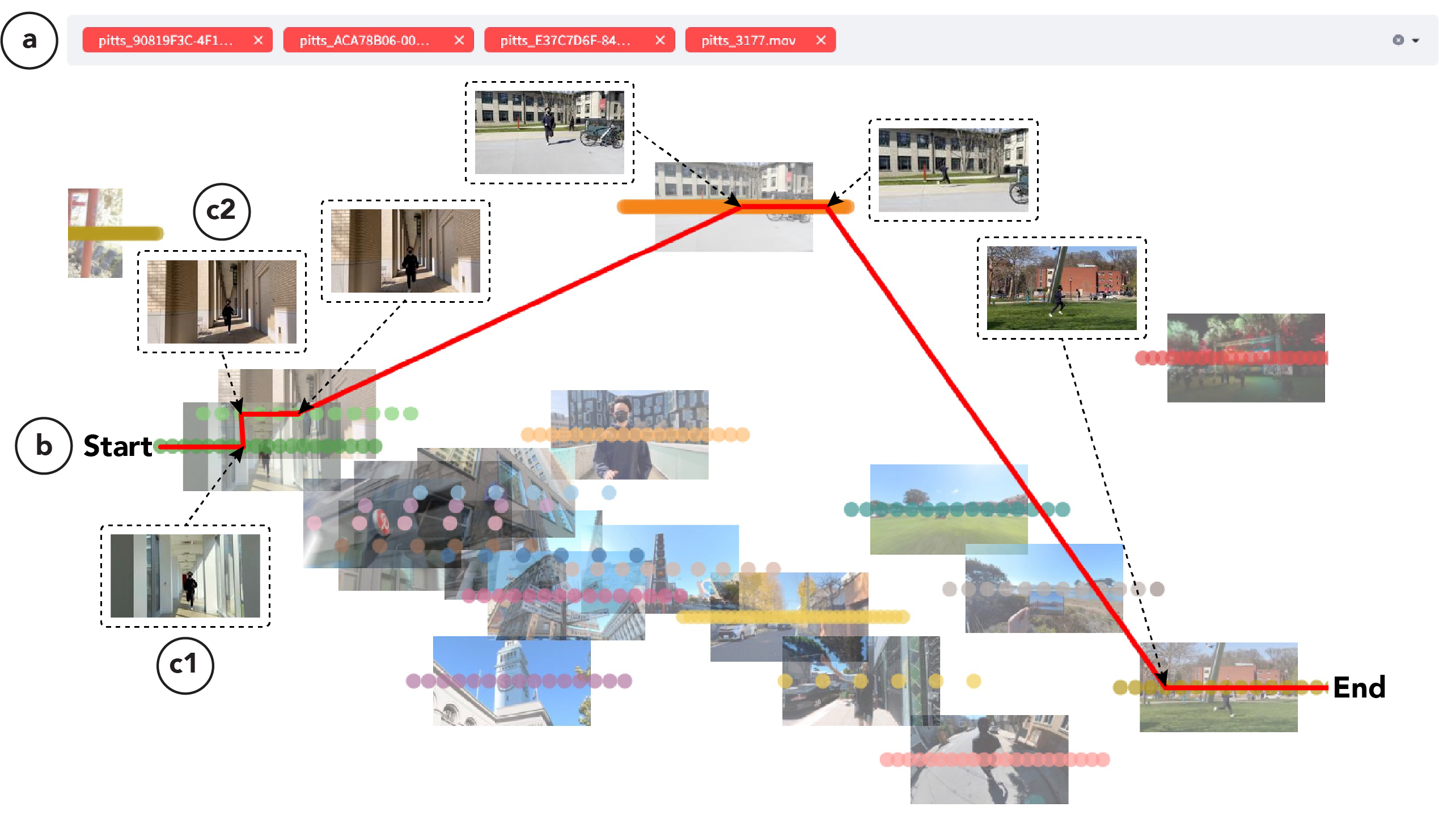

Route Planner

The editor can select several video clips to automatically generate a rough cut video based on the selected lens (a). For example, the editor can create a video of a person running with various backgrounds under the shape lens (b). Route Planner automatically finds the optimal video transitions (c1 and c2).
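As a rough illustration of route planning, the sketch below orders a handful of clips so that the summed distance between each clip's last frame and the next clip's first frame is minimal. It is a simplification under stated assumptions: the clip names and embeddings are invented, each clip is reduced to two frame embeddings, and a brute-force search over orderings is used, which may differ from how Route Planner selects transitions.

```python
from itertools import permutations
from math import dist

def plan_route(clips):
    """Return the clip order with the lowest total transition cost.

    clips maps clip_id to (first_frame_embedding, last_frame_embedding).
    """
    def cost(order):
        # Cost of a cut: distance from the end of one clip to the start of the next.
        return sum(dist(clips[a][1], clips[b][0]) for a, b in zip(order, order[1:]))
    return min(permutations(clips), key=cost)

clips = {
    "run_park": ([0.0, 0.0], [1.0, 0.0]),
    "run_beach": ([2.0, 0.1], [3.0, 0.0]),
    "run_city": ([1.0, 0.1], [2.0, 0.0]),
}
route = plan_route(clips)
```

Brute force grows factorially with the number of clips, so it is only practical for the small selections typical of a rough cut.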

Example videos created by user study participants

Extended Applications

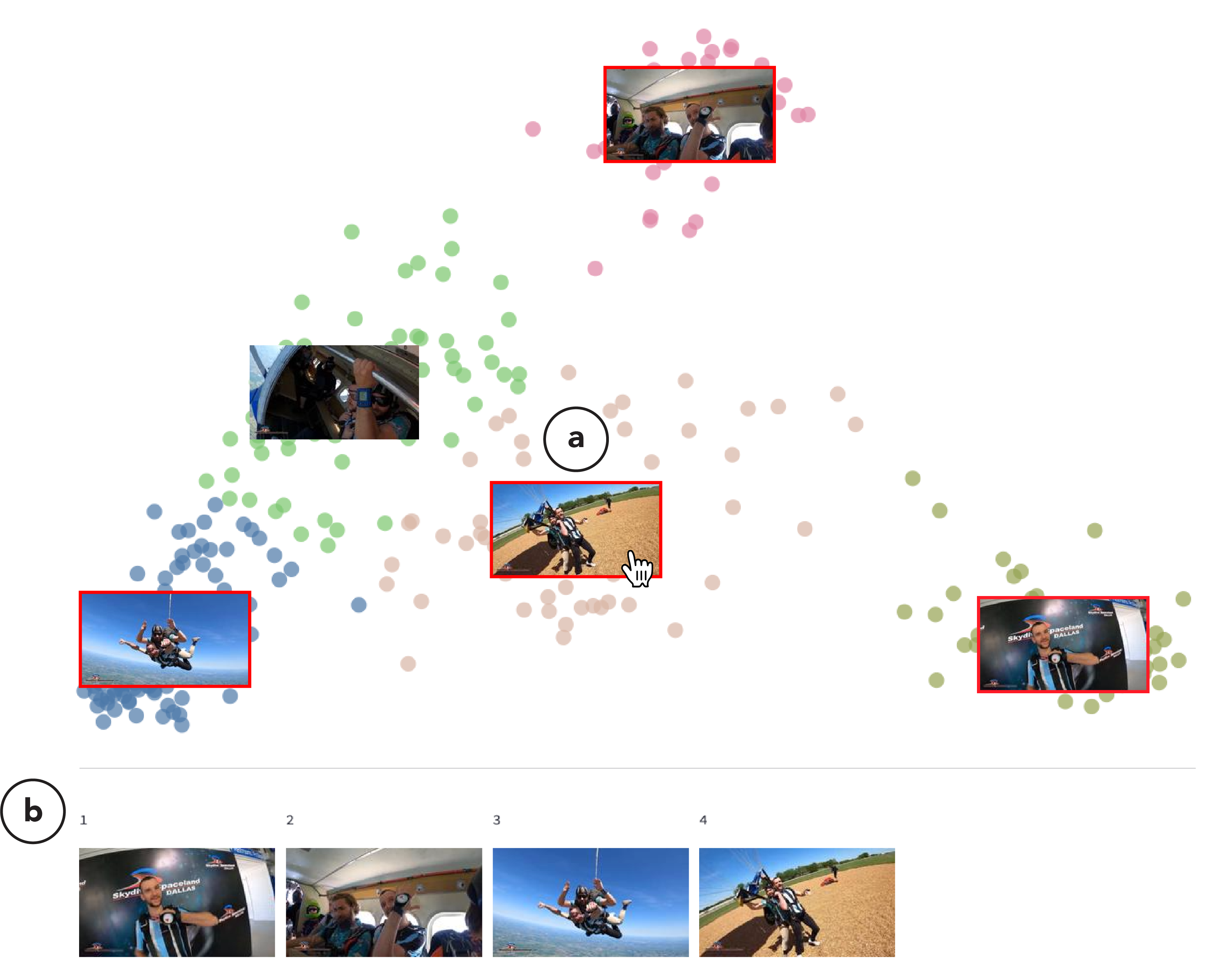

Video Summarization

VideoMap's Project Panel can be extended to create summary videos. We automatically create "semantic districts" that approximately represent the main activities of a video using k-means clustering under the semantic lens. The editor can select several landmarks to specify the activities to include in the summary video. Selections are highlighted with red borders (a) and displayed as a storyboard (b).
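The clustering step can be sketched with a plain k-means (Lloyd's algorithm) over frame embeddings. The version below is a minimal illustration with assumptions labeled: it uses a deterministic farthest-point initialization for reproducibility, toy two-dimensional embeddings, and a hypothetical `landmarks` helper that picks the frame nearest each centroid as the district's representative.

```python
from math import dist

def kmeans(points, k, iterations=20):
    """Lloyd's algorithm with deterministic farthest-point initialization."""
    centers = [points[0]]
    while len(centers) < k:
        centers.append(max(points, key=lambda p: min(dist(p, c) for c in centers)))
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assign every frame to its nearest center.
        labels = [min(range(k), key=lambda j: dist(p, centers[j])) for p in points]
        # Move each center to the mean of its members.
        for j in range(k):
            members = [p for p, label in zip(points, labels) if label == j]
            if members:  # keep the old center if a cluster is empty
                centers[j] = [sum(axis) / len(members) for axis in zip(*members)]
    return labels, centers

def landmarks(points, labels, centers):
    """Per district, pick the index of the frame closest to the centroid."""
    result = []
    for j, center in enumerate(centers):
        members = [i for i, label in enumerate(labels) if label == j]
        if members:
            result.append(min(members, key=lambda i: dist(points[i], center)))
    return result

frame_vecs = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]
labels, centers = kmeans(frame_vecs, k=2)
district_landmarks = landmarks(frame_vecs, labels, centers)
```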

Video Highlight

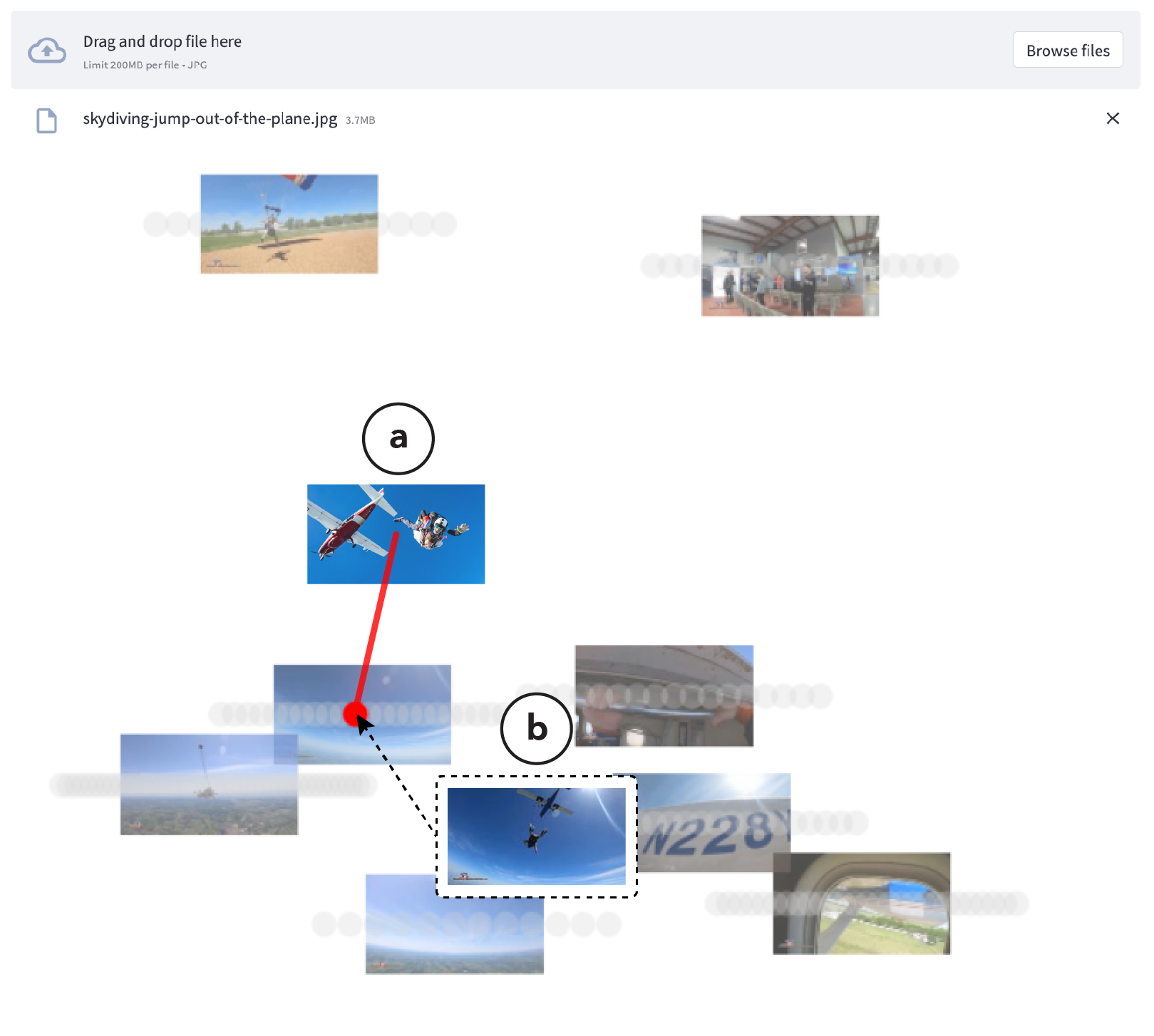

VideoMap's Paths Explorer can be extended to create highlight videos. The editor can upload a photograph (i.e., a custom landmark) depicting an activity (e.g., skydiving) (a). Our key insight is that photographs taken by photographers tend to capture the most highlight-worthy moments of an activity (e.g., when the skydiver jumps out of the aircraft). We then generate a highlight video from the video frames nearest to the custom landmark in the semantic space (b).

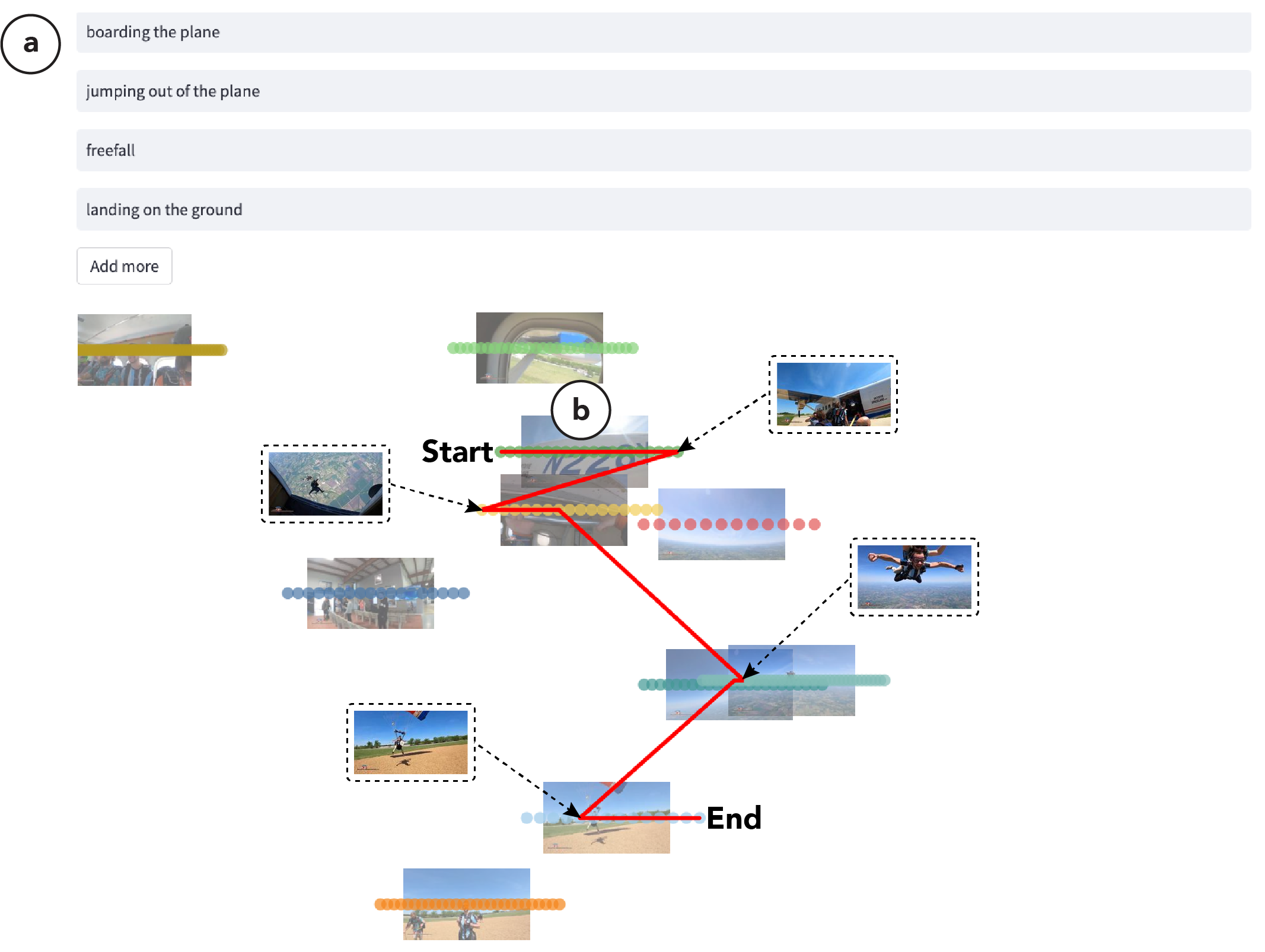

Text-Based Video Editing

VideoMap's Route Planner can be extended to edit videos using text. The editor can describe a desired video using descriptive sentences, like writing a story (a). We then match each sentence to the closest video clip in the semantic space and generate a video by finding the shortest route along the clips (b).
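The sentence-matching step can be sketched as a nearest-clip lookup per sentence, assuming sentences and clips share one embedding space. The sketch covers only the matching, not the subsequent route finding, and the clip names and vectors are invented for illustration.

```python
from math import dist

def match_sentences(sentence_vecs, clip_vecs):
    """Return, for each sentence in story order, the id of the nearest clip."""
    return [
        min(clip_vecs, key=lambda cid: dist(vec, clip_vecs[cid]))
        for vec in sentence_vecs
    ]

# Made-up embeddings for two story sentences and three clips.
sentence_vecs = [[0.9, 0.1], [0.1, 0.8]]
clip_vecs = {"sunrise": [1.0, 0.0], "hike": [0.5, 0.5], "campfire": [0.0, 1.0]}
storyboard = match_sentences(sentence_vecs, clip_vecs)
```

This version allows the same clip to be matched by more than one sentence; whether that is desirable is a design choice the sketch leaves open.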